Recap

Deterministic Games:

- States: S, (start at )

- Players: (take turns)

- Player whose turn it is to move in state

- Set of legal moves in state

- Transition Function, state resulting from taking action in state

- Terminal Test, true when game is over

- Solution for a player is a ’policy‘.

Value of a state:

- Best achievable outcome (utility) from a state.

Minimax Values

- Consider opponent in game

- Opponent attempts to minimize utility

- Agent/Player wants to maximize utility

Alpha - Beta Pruning & Tree Pruning)

Minimax Pruning

- Prune nodes that are ‘highest’, and keep minimum utility (on opponents side)

- Opponent will not let you get high utility, so no point exploring those nodes

Alpha-Beta Pruning

- When computing MIN-VALUE at some node

- Loop over ’s children

- Let be the best value that MAX can get at any choice point along current path from the root

- If becomes worse than , max will avoid it, so we can stop considering ’s other children.

- MAX version is symmetric.

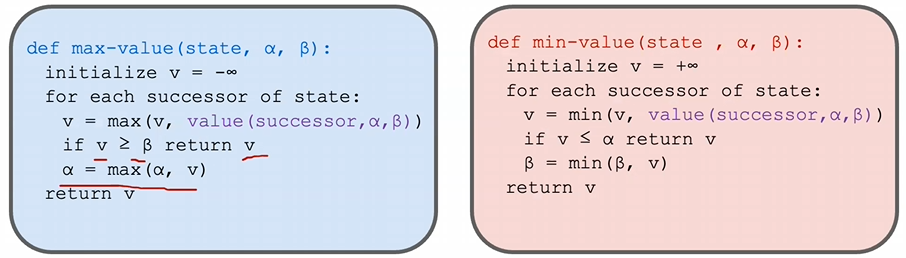

Implementation

MAX’s best option on path to root

MIN’s best option on path to root.

Basically, avoid worse branches. If the opponent can get you in a state that is of worse quality than the worst state of a better branch, there is no point exploring that branch.

Resource Limits

- Problem: In realistic games, we cannot search to the leaves

- Solution: Depth-Limited Search

- Search only a limited depth in the tree.

- Replace terminal utilities with an evaulation function for non-terminal positions.

- Guarantee of optimal play is gone.

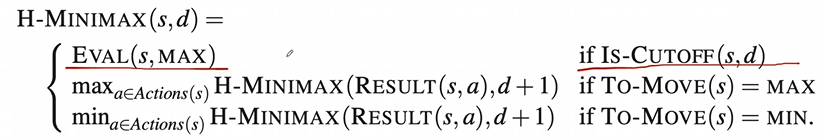

Heuristic Alpha-Beta Pruning

- Heuristic MINIMAX

- Idea: Treat nonterminal nodes as if they were terminal.

- Evaluation Function

- A cutoff test, true when stop searching.

Evaluation Functions

- Desirable Properties

- Order terminal states in same way as true ‘utility’ function

- Strongly Correlated with actual minimax value of states

- Efficient to calculate

- Typically use features — characteristics of game state that are correlated with winning.

- Evaluation function combines features to produce score:

Monte Carlo Search Tree

- Instead of using heuristic evaluation function

- Estimate value by averaging utility over several simulations

- Simulation chooses moves first for one player, than another, until a terminal position reached.

- Moves chosen randomly and according to some ‘playout policy’ (choose good moves)

- Exploration (try nodes with few playouts) vs. Exploitation (try nodes that have done well in the past)