Recap

Direct Evaluation

Temporal Difference Learning

- Big Idea: Learn from every experience using running average

- Factor in result state (running average)
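A minimal sketch of the running-average update described above, assuming state values live in a plain dictionary. The names `td_update`, `alpha` (learning rate) and `gamma` (discount) are illustrative and not taken from the notes.

```python
def td_update(values, state, reward, next_state, alpha=0.5, gamma=1.0):
    """Apply one temporal-difference update to V(state) from one transition.

    The sample factors in the result state: reward + gamma * V(next_state).
    The new value is a running average of the old value and that sample.
    Unseen states are assumed to start at 0.0.
    """
    sample = reward + gamma * values.get(next_state, 0.0)
    values[state] = (1 - alpha) * values.get(state, 0.0) + alpha * sample
    return values[state]


# Usage: one observed transition A -> B with reward -2.
values = {"A": 0.0, "B": 8.0}
new_value = td_update(values, "A", reward=-2.0, next_state="B")
```

With `alpha=0.5`, each new sample counts as much as everything learned before it; a smaller `alpha` makes the average move more slowly.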

Active Reinforcement Learning

Learner has to choose between exploitation and exploration

- Learn utilities for state/actions

- Compute optimal policy for current learned model

How to Explore?

- Simplest: Random actions ($\epsilon$-greedy)

- Every time step, flip a coin

- With small probability $\epsilon$, act randomly

- With large probability $1 - \epsilon$, act on current policy

- Problem?

- Will keep acting randomly even once learning is done

- Can reduce $\epsilon$ over time

- Can use exploration function

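The coin flip above can be sketched as follows. This assumes Q-values are stored in a dictionary keyed by `(state, action)`; the function names and the particular decay schedule are illustrative choices, not something the notes specify.

```python
import random


def epsilon_greedy(q_values, state, actions, epsilon=0.1, rng=random):
    """With probability epsilon act randomly, otherwise act greedily."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    # Greedy choice under the current estimates (unseen pairs default to 0.0).
    return max(actions, key=lambda a: q_values.get((state, a), 0.0))


def decayed_epsilon(step, start=1.0, end=0.05, decay=0.001):
    """One possible way to reduce epsilon over time (assumed schedule)."""
    return max(end, start / (1.0 + decay * step))


# Usage: pick an action while epsilon shrinks as the step count grows.
q = {("s", "left"): 1.0, ("s", "right"): 2.0}
action = epsilon_greedy(q, "s", ["left", "right"], epsilon=decayed_epsilon(5000))
```

Keeping a floor (`end`) above zero means the agent never stops exploring entirely; setting it to zero removes the random actions once learning is done.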

Exploration Functions

When to explore?

- Random: explore a fixed amount

- Better Idea: Explore areas whose values have not been established

- Exploration Function

- Takes a value estimate $u$ and a visit count $n$, and returns an optimistic utility, e.g. $f(u, n) = u + k/n$ with $k > 0$
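A sketch of how an exploration function of the form $f(u, n) = u + k/n$ might plug into a Q-update. Two assumptions are made here: the count is offset by 1 so that unvisited pairs do not divide by zero, and the optimistic value replaces the plain Q-value of the successor state inside the update. All names are hypothetical.

```python
from collections import defaultdict


def exploration_value(u, n, k=1.0):
    """Optimistic utility: rarely visited pairs get a bonus of k / (n + 1)."""
    return u + k / (n + 1)


def q_update_with_exploration(q, counts, s, a, r, s_next, actions,
                              alpha=0.5, gamma=0.9, k=1.0):
    """Q-update whose target uses optimistic values of the next state."""
    counts[(s, a)] += 1
    best_next = max(
        exploration_value(q[(s_next, a2)], counts[(s_next, a2)], k)
        for a2 in actions
    )
    sample = r + gamma * best_next
    q[(s, a)] = (1 - alpha) * q[(s, a)] + alpha * sample
    return q[(s, a)]


# Usage: tables default to 0.0 values and 0 visits.
q, counts = defaultdict(float), defaultdict(int)
q_update_with_exploration(q, counts, "s", "a", 1.0, "t", ["a", "b"])
```

Because the bonus shrinks as the visit count grows, exploration fades on its own in well-visited regions instead of continuing at a fixed rate.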

Q-Learning Properties

- Q-Learning converges to optimal policy even if acting sub-optimally
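To illustrate this property, here is a small sketch on a made-up four-state chain (states 0 to 3, reward +1 for reaching state 3). The agent acts uniformly at random throughout, yet the greedy policy read off the learned Q-values moves right in every state. The environment and all names are assumptions for illustration only.

```python
import random


def q_learning_random_behavior(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Tabular Q-learning where the behavior policy is uniformly random."""
    rng = random.Random(seed)
    n_states, goal = 4, 3
    actions = (-1, +1)  # move left or move right
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap on episode length
            a = rng.choice(actions)  # sub-optimal behavior: random action
            s_next = min(max(s + a, 0), goal)
            r = 1.0 if s_next == goal else 0.0
            # The target uses the best next action, not the one actually taken.
            future = 0.0 if s_next == goal else max(q[(s_next, b)] for b in actions)
            q[(s, a)] += alpha * (r + gamma * future - q[(s, a)])
            s = s_next
            if s == goal:
                break
    return q


def greedy_policy(q, n_states=3, actions=(-1, +1)):
    """Best action per non-terminal state under the learned Q-values."""
    return {s: max(actions, key=lambda a: q[(s, a)]) for s in range(n_states)}


policy = greedy_policy(q_learning_random_behavior())
```

The key line is the `max` over next actions in the target: the update learns about the greedy policy regardless of which action the behavior policy picks.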

Regret

Even if you learn the optimal policy, you will make mistakes along the way.

Regret: Total mistake cost. The difference between your (expected) rewards, including youthful suboptimality, and the optimal (expected) rewards.
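As a small arithmetic illustration, regret can be tallied as the per-step gap to the optimal expected reward. The sketch assumes a fixed optimal expected reward per step, which is a simplification; the function name and the numbers are made up.

```python
def total_regret(optimal_expected_reward, expected_rewards_received):
    """Sum of per-step shortfalls relative to acting optimally."""
    return sum(optimal_expected_reward - r for r in expected_rewards_received)


# Usage: two early exploratory steps fall short, later steps are optimal.
regret = total_regret(1.0, [0.0, 0.5, 1.0, 1.0])
```

Two learners can both end up with the optimal policy and still differ in regret, depending on how costly their exploration was.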

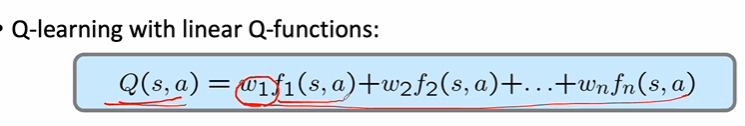

Linear Value Functions

- Can write a Q-function for any state using a few weights, e.g. $Q(s, a) = w_1 f_1(s, a) + \dots + w_n f_n(s, a)$

- Advantage: Experience summed up in a few powerful numbers

- Disadvantage: States may share features but are different in value
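A minimal sketch of a linear Q-function as a weighted sum of features. The feature names here are invented for illustration; the notes do not specify any.

```python
def linear_q(weights, features):
    """Q(s, a) as the dot product of weights and feature values f_i(s, a)."""
    return sum(weights.get(name, 0.0) * value for name, value in features.items())


# Usage: two hypothetical features describing one (state, action) pair.
weights = {"dist_to_dot": 4.0, "dist_to_ghost": -1.0}
features = {"dist_to_dot": 0.5, "dist_to_ghost": 1.0}
q_value = linear_q(weights, features)
```

Any two state-action pairs with identical feature values get the same Q-value under this representation, which is the disadvantage noted above.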

Approximate Q-Learning

- Update the weights $w$ rather than individual Q-values
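A sketch of one common form of the weight update, assuming a linear Q-function over named features: compute the difference between the sampled target and the current estimate, then move each weight in proportion to its feature value. The function name, the parameter names, and the choice to pass in the best next Q-value directly are assumptions.

```python
def approx_q_update(weights, features, reward, max_next_q, alpha=0.1, gamma=0.9):
    """One approximate Q-learning step on a linear Q-function.

    difference = (reward + gamma * max_a' Q(s', a')) - Q(s, a)
    w_i <- w_i + alpha * difference * f_i(s, a)
    """
    q_sa = sum(weights.get(n, 0.0) * v for n, v in features.items())
    difference = (reward + gamma * max_next_q) - q_sa
    for name, value in features.items():
        weights[name] = weights.get(name, 0.0) + alpha * difference * value
    return difference


# Usage: a better-than-expected outcome raises the weights of active features.
weights = {"f1": 4.0, "f2": -1.0}
approx_q_update(weights, {"f1": 0.5, "f2": 1.0}, reward=2.0, max_next_q=0.0,
                alpha=0.5, gamma=1.0)
```

Features with value 0 for the current state-action pair leave their weights unchanged, so each experience only adjusts the weights that contributed to the estimate.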