Recap

Approximate Q-Learning

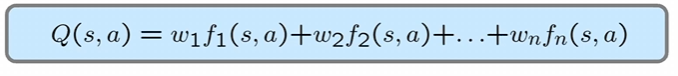

- Q-Learning with linear Q-Functions

- transition = $(s, a, r, s')$

- difference = $\left[ r + \gamma \max_{a'} Q(s', a') \right] - Q(s, a)$
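The update can be sketched in a few lines of Python. This is a minimal illustration, not the course's reference implementation. It assumes the usual setup for linear Q-functions: $Q(s, a) = \sum_i w_i f_i(s, a)$, a transition $(s, a, r, s')$, and the weight update $w_i \leftarrow w_i + \alpha \cdot \text{difference} \cdot f_i(s, a)$, which is not spelled out in these notes. The names `feature_fn`, `next_actions`, `alpha`, and `gamma` are hypothetical.

```python
from typing import Callable, Dict, Hashable, Iterable, Tuple

Features = Dict[str, float]
Transition = Tuple[Hashable, Hashable, float, Hashable]  # (s, a, r, s')


def q_value(weights: Dict[str, float], features: Features) -> float:
    """Linear Q-function: dot product of weights and feature values."""
    return sum(weights.get(name, 0.0) * value for name, value in features.items())


def approx_q_update(
    weights: Dict[str, float],
    feature_fn: Callable[[Hashable, Hashable], Features],  # hypothetical feature extractor
    transition: Transition,
    next_actions: Iterable[Hashable],
    alpha: float = 0.1,   # learning rate (assumed value)
    gamma: float = 0.9,   # discount factor (assumed value)
) -> Dict[str, float]:
    """Return updated weights after observing one transition."""
    s, a, r, s_next = transition
    feats = feature_fn(s, a)

    # Best estimated value in the next state; 0.0 if s' has no actions (terminal).
    next_q = max(
        (q_value(weights, feature_fn(s_next, a2)) for a2 in next_actions),
        default=0.0,
    )

    # difference = [r + gamma * max_a' Q(s', a')] - Q(s, a)
    difference = (r + gamma * next_q) - q_value(weights, feats)

    # Move each weight in proportion to its feature's activation.
    new_weights = dict(weights)
    for name, value in feats.items():
        new_weights[name] = new_weights.get(name, 0.0) + alpha * difference * value
    return new_weights
```

Returning a new dictionary instead of mutating `weights` keeps the sketch easy to test; an agent would normally update its weights in place.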

Learning

- Essential for unknown environments

- Learning is useful as a system construction method

Supervised Learning

Task: Learn a mapping (model) from inputs $x$ to outputs $y$

- Inputs are also called features

- Outputs are also called targets

- If y is categorical: classification model

- If y is real-valued: regression model

- The model has learnable parameters $\theta$

Experience: Given in the form of input-output pairs

- Training Set $D = \{(x_i, y_i)\}_{i=1}^{N}$

Examples

- Email spam detector

  - Input: Words

  - Output: Spam or not

  - Classification model

- Digit classification

  - Input: Image

  - Output: Digits

  - Classification model

- Stock forecasting

  - Input: Price history

  - Output: Future price

  - Regression model

K-Nearest Neighbor Algorithm

- Memorize training set

- Given a distance metric, find the K training samples closest to x and predict the average of their y values.

- For classification, this amounts to counting the labels of the K closest samples and picking the most frequent one.
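The two bullets above can be sketched as follows. This is a minimal illustration assuming Euclidean distance and a non-empty training set stored as `(x, y)` pairs; the function names are hypothetical. "Memorizing" the training set simply means keeping the list and doing all the work at prediction time.

```python
import math
from collections import Counter
from typing import Callable, List, Sequence, Tuple

Point = Sequence[float]


def euclidean(a: Point, b: Point) -> float:
    """Euclidean distance; any other metric with this signature can be swapped in."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def k_nearest(train: List[Tuple[Point, object]], x: Point, k: int,
              distance: Callable[[Point, Point], float] = euclidean):
    """Return the k training pairs whose inputs are closest to x."""
    return sorted(train, key=lambda pair: distance(pair[0], x))[:k]


def knn_classify(train, x, k, distance=euclidean):
    """Classification: majority vote among the k nearest labels."""
    labels = [y for _, y in k_nearest(train, x, k, distance)]
    # Ties go to the label that appears first among the nearest neighbors.
    return Counter(labels).most_common(1)[0][0]


def knn_regress(train, x, k, distance=euclidean):
    """Regression: average of the k nearest real-valued targets."""
    targets = [y for _, y in k_nearest(train, x, k, distance)]
    return sum(targets) / len(targets)
```

Sorting the whole training set costs O(N log N) per query, which is fine for a sketch; larger datasets usually call for a spatial index or a partial sort.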

Probabilistic Modeling

- Coin flipping: we want to predict whether a coin flip will come up heads or tails

Maximum Likelihood for Learning

- Training Set: $D = \{x_1, \dots, x_N\}$

- Likelihood of D

- Assume samples are independent and identically distributed

- $L(D; \theta) = \prod_{i=1}^{N} p(x_i; \theta)$

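- Maximize $L(D; \theta)$ $\Leftrightarrow$ Maximize $\log L(D; \theta)$

- To maximize, find $\theta$ such that $\frac{\partial}{\partial \theta} \log L(D; \theta) = 0$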
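For the coin-flipping example, the maximum-likelihood recipe can be sketched as below. This is an illustration under assumptions that the notes do not state explicitly: flips are encoded as 1 (heads) and 0 (tails), and the model is a Bernoulli distribution with a single parameter `theta` = P(heads). Under that model, setting the derivative of the log-likelihood to zero gives the fraction of heads. The grid search is only a numerical cross-check and the function names are hypothetical.

```python
import math
from typing import Sequence


def log_likelihood(flips: Sequence[int], theta: float) -> float:
    """Log-likelihood of i.i.d. Bernoulli flips (1 = heads, 0 = tails), 0 < theta < 1."""
    heads = sum(flips)
    tails = len(flips) - heads
    return heads * math.log(theta) + tails * math.log(1.0 - theta)


def mle_closed_form(flips: Sequence[int]) -> float:
    """Closed-form maximizer under the Bernoulli assumption: fraction of heads."""
    return sum(flips) / len(flips)


def mle_grid_search(flips: Sequence[int], steps: int = 999) -> float:
    """Numerical check: try evenly spaced theta values strictly inside (0, 1)."""
    candidates = [(i + 1) / (steps + 1) for i in range(steps)]
    return max(candidates, key=lambda t: log_likelihood(flips, t))


flips = [1, 1, 1, 0, 1, 0, 1, 1, 0, 1]  # made-up data: 7 heads, 3 tails
theta_hat = mle_closed_form(flips)
theta_grid = mle_grid_search(flips)
```

Working with the log-likelihood turns the product over samples into a sum, which is easier to differentiate and avoids numerical underflow when there are many flips.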